What's the actual difference between asking a calculator vs. an LLM what is “1+1”?

Humans can't always tell when we've asked the question we intended to ask.

I met a new acquaintance today: someone hoping to go into AI governance and policy making. I am optimistic about their chances of being a thoughtful, sensible influence in that space because of how they answered a question I ask when trying to probe someone’s conceptual depth of AI understanding¹.

What is the difference between asking a calculator and asking an LLM for the answer to “1+1”, given that you can run an LLM on that exact same calculator?

My acquaintance paused for a bit before replying, “The difference is you’re asking two different questions.”

That’s new. Promising.

Next question: how are they different?

Here, they mentioned calculators running binary arithmetic with logic gates, but fell back on the oft-heard phrase that LLMs predict the next token.

Not bad. I would give substantial partial credit for recognizing that two different questions were being asked.

This acquaintance mentioned a background in physics and philosophy, with some experience training a small vanilla multilayer perceptron, which probably helped. I am unsure of the current or future makeup of people in AI governance, but if there were an exam you needed to pass before you were allowed to pass or advise on AI policy, I would definitely include the question above as an initial sanity check.

Sometimes in life, it is not immediately obvious that you’ve asked someone (or something) a different question from the one you intended to ask. You can use the exact same words and symbols to ask seemingly the same question, and still not be guaranteed to have asked the question you meant to ask, as Key and Peele so skilfully remind us.

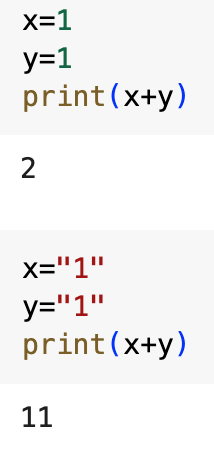

As it so happens, making sure you’ve asked the question you intended to ask is one of the first lessons you learn when programming, usually through an error message, if you’re lucky. If you’re unlucky, you don’t get the error message, but you will still have made the error.

Here’s another interesting question to ponder: Can an answer be wrong if you didn’t ask the right question?

The difference between asking for `1+1` and for `"1"+"1"` is a good shortcut analogy for thinking about the difference between a calculator and an LLM. It is also a good analogy for thinking about the difference between the neurons in your brain “adding” neural spikes together and you consciously adding 1+1.
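A minimal illustration in Python (my choice of language for the sketches in this post): the quotes alone decide whether `+` means numeric addition or string concatenation.

```python
# Same characters, two different questions.
as_numbers = 1 + 1        # integer addition
as_strings = "1" + "1"    # string concatenation: glue two strings together

print(as_numbers)  # 2
print(as_strings)  # 11
```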

You should also always keep this handy analogy in mind when thinking about why LLMs generate the output they do, without prematurely labeling it “stupid”. For example, some of you may remember this particular popular ChatGPT error.

This error might seem stupid to the typical person, who does not spend a large portion of their time interacting with software versioning.

By the way, here is a list of Apple’s Mac OS version numbering. Notice anything interesting?

I don’t know the proportion of “regular numbers” to “software version numbers” in the training data of the version of ChatGPT that made the error.

I do wonder, though: if you happen to be a human who spends enough of your time running software version numbers through your brain, who’s to say you wouldn’t also be confused?
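A small sketch of how the same digits order differently depending on how you read them, assuming version strings are dot-separated integers (the `parse_version` helper is hypothetical, written just for this example). Mac OS X really did go from 10.9 to 10.10, which is exactly where the two readings disagree.

```python
def parse_version(v: str) -> tuple[int, ...]:
    """Hypothetical helper: read "10.10" as the integers (10, 10)."""
    return tuple(int(part) for part in v.split("."))

a, b = "10.9", "10.10"

# As decimal numbers, 10.10 is just 10.1, which is smaller than 10.9.
print(float(a) > float(b))                  # True

# As version numbers, each part is compared as an integer, so 10.10 comes after 10.9.
print(parse_version(a) > parse_version(b))  # False

releases = ["10.9", "10.10", "10.11"]
print(sorted(releases, key=float))          # ['10.10', '10.11', '10.9']
print(sorted(releases, key=parse_version))  # ['10.9', '10.10', '10.11']
```

Neither ordering is wrong; each is the correct answer to a different question.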

If `1+1` vs. `"1"+"1"` is the shortcut for thinking about calculators vs. LLMs, what’s the long answer?

How exactly is a calculator computing 1+1 differently to an LLM computing 1+1?

The long answer is in this video for calculators:

And these 2 videos for LLMs:

When you have a calculator compute 1+1, the transistors and logic gates in the hardware have electricity running through them in a circuit. This circuit is arranged and wired (traditionally by human design, but now also by deep reinforcement learning) so that the presence (1) or absence (0) of high voltage at the output end of the circuit is logically guaranteed to give the result of 1+1, or of any other 8/16/32/64-bit arithmetic your specific calculator or computer chip is designed to handle.
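To make the calculator side concrete, here is a sketch of a textbook 8-bit ripple-carry adder in Python, with each logic gate modelled as a function on single bits. This is an illustration under that assumption, not how any particular calculator is wired: real chips build gates from transistors and often use faster carry schemes, but the guarantee is of the same kind.

```python
def and_gate(a: int, b: int) -> int:
    return a & b

def or_gate(a: int, b: int) -> int:
    return a | b

def xor_gate(a: int, b: int) -> int:
    return a ^ b

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three single bits; return (sum_bit, carry_out)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out

def add_8bit(x: int, y: int) -> int:
    """Ripple-carry addition: one full adder per bit, carry passed upward."""
    result, carry = 0, 0
    for i in range(8):              # least significant bit first
        a = (x >> i) & 1
        b = (y >> i) & 1
        sum_bit, carry = full_adder(a, b, carry)
        result |= sum_bit << i
    return result                   # the final carry is dropped, so results wrap at 256

print(add_8bit(1, 1))      # 2
print(add_8bit(200, 100))  # 44, because 300 does not fit in 8 bits
```

Because the circuit only ever sees bits, its behaviour can be checked against ordinary arithmetic for every one of the 65,536 possible input pairs.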

The “thing” that is doing the computing in a calculator is the flow of electricity along the wiring in the calculator’s chip. And the “thing” that guarantees the functional correctness of a calculator’s arithmetic computation is the specific structural placement of the transistors that control the flow of electricity. The transistors implement logic gates, which themselves implement the mathematical Laws of Boolean Algebra in the circuit. It’s all very elegantly designed to be precise, at multiple levels of abstraction.
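The claim that gates implement Boolean algebra can be checked exhaustively, because a two-input gate only ever sees four input combinations. This sketch assumes NAND as the only primitive (NAND is functionally complete, though real chips use many gate types) and wires the other gates out of it.

```python
from itertools import product

def nand(a: int, b: int) -> int:
    """The only primitive in this sketch: output is 0 only when both inputs are 1."""
    return 1 - (a & b)

def not_(a: int) -> int:
    return nand(a, a)

def and_(a: int, b: int) -> int:
    return not_(nand(a, b))

def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))

def xor_(a: int, b: int) -> int:
    shared = nand(a, b)
    return nand(nand(a, shared), nand(b, shared))

# Four cases per law, so every law is checked outright rather than sampled.
for a, b in product((0, 1), repeat=2):
    assert and_(a, b) == a & b
    assert or_(a, b) == a | b
    assert xor_(a, b) == a ^ b
    # De Morgan's law: NOT (a AND b) == (NOT a) OR (NOT b)
    assert not_(and_(a, b)) == or_(not_(a), not_(b))

print("all gate checks passed")
```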

(Side note: the “thing” doing the computing doesn’t have to be the flow of electricity. It could be the flow of water or marbles too. But electricity is faster and less messy.

And the “thing” that guarantees correctness doesn’t have to be binary computation or Boolean logic either. Ternary computing, three-valued logic, and other number systems exist. But again, binary was, in practice, the fastest and cleanest solution in the real world of analogue signals.

But who knows: if the era of 1-bit LLMs does become a thing, hardware for ternary computing might become more popular.)
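For context on the 1-bit remark: the models discussed under that banner, such as BitNet b1.58, restrict weights to −1, 0, or +1. A sketch of why that matters for hardware: with ternary weights, a dot product needs no multiplications at all, only additions and subtractions. The function below is illustrative and not taken from any BitNet implementation.

```python
def ternary_dot(weights: list[int], activations: list[float]) -> float:
    """Dot product for weights restricted to -1, 0, or +1.

    No multiplication is performed: each weight either adds, subtracts,
    or skips the matching activation.
    """
    if len(weights) != len(activations):
        raise ValueError("weights and activations must have the same length")
    total = 0.0
    for w, x in zip(weights, activations):
        if w == 1:
            total += x
        elif w == -1:
            total -= x
        elif w != 0:
            raise ValueError(f"weight {w} is not ternary")
    return total


weights = [1, 0, -1, 1]
activations = [0.5, 2.0, 1.5, -0.25]

print(ternary_dot(weights, activations))                 # -1.25
print(sum(w * x for w, x in zip(weights, activations)))  # -1.25, same answer using multiplies
```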

All of this correctly working computation shouldn’t be taken for granted at all, by the way. There is an archetype of human who sees a calculator computing 1+1, and then goes on to dismiss it as “mere”, as in “merely a calculator/computer”. But the fact that humanity as a whole figured out how to do all this precision engineering is honestly a marvel to me.

Mistakes can happen at every level of abstraction when making a computing thing. A lot of money and resources go into building processes upon processes to catch those mistakes before you get a correctly functioning “mere calculator” or “mere computer”. Just look at the incredible sums of money funnelled into TSMC, ASML, IBM, and other compute manufacturing businesses.

So that’s what happens when you ask a calculator to do 1+1. What about LLMs?

When you use an LLM like GPT-2-small to do 1+1, GPT-2-small represents the token for “1” as a list of 768 numbers starting with [-0.1087296, -0.1566668, 0.09924976, 0.1326873, 0.07528587, … ]. So when you ask GPT-2-small to compute 1+1 using the same hardware, same electricity, same transistors, same logic gates etc., the circuits you are activating aren’t guaranteed to be computing what you might normally consider “1+1”.
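A toy sketch of what the LLM side receives instead of the number 1. Everything here is a stand-in: the four-token vocabulary and the random embedding table are hypothetical, and only the width of 768 matches GPT-2-small. The real model would look each token up in its learned table and then run the vectors through 12 transformer blocks, which this sketch skips entirely.

```python
import random

# Hypothetical toy vocabulary; GPT-2's real tokenizer has 50,257 entries.
vocab = {"1": 0, "+": 1, "=": 2, "2": 3}
d_model = 768  # the one realistic number here: GPT-2-small's embedding width

# Hypothetical embedding table: one row of 768 random floats per token.
# A trained model learns these values; here they are just noise.
rng = random.Random(0)
embedding = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in vocab]

def embed(tokens: list[str]) -> list[list[float]]:
    """Replace each token with its row from the embedding table."""
    return [embedding[vocab[t]] for t in tokens]

prompt = ["1", "+", "1", "="]  # toy tokenization of "1+1="
vectors = embed(prompt)

print(len(vectors), len(vectors[0]))  # 4 768
print(vectors[0] is vectors[2])       # True: both "1" tokens get the same row

# Skipping all transformer blocks, score each vocabulary entry with a dot product
# against the last vector (GPT-2 also reuses its embedding table for its final
# scoring step). With random weights the "answer" is arbitrary.
last = vectors[-1]
logits = {tok: sum(w * x for w, x in zip(embedding[i], last)) for tok, i in vocab.items()}
prediction = max(logits, key=logits.get)
print(prediction)
```

Nothing in this pipeline knows that "1" means one; whether "2" comes out on top depends entirely on what the learned numbers happen to encode.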

Because what you’ve actually asked GPT-2-small to do is -0.1087296 * some_number + -0.1566668 * some_other_number + … at least a hundred million times over, with a sprinkling of other operations. Make no mistake, though: the correctness guarantees of Boolean Algebra in the logic circuits are still in place. Almost everyone is still running their GPTs on digital chips (unless you're on the cool DishBrain team; paper version). It’s just that the logic is guaranteeing that your computer running GPT-2-small does -0.1087296 * some_number + -0.1566668 * some_other_number + … near perfectly.
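The “hundred million” figure can be sanity-checked with back-of-the-envelope arithmetic from GPT-2-small’s published configuration (12 blocks, width 768, vocabulary of 50,257). This sketch counts only the weight-matrix multiply-adds needed to predict one token, ignoring biases, layer norms, activation functions, and the attention-score products that grow with prompt length, so it is an underestimate.

```python
# GPT-2-small configuration values.
n_layer = 12          # transformer blocks
d_model = 768         # embedding width
d_ff = 4 * d_model    # MLP hidden width (3072)
n_vocab = 50257       # tokenizer vocabulary size

# Weight-matrix multiply-adds per block, for one token.
qkv = d_model * 3 * d_model            # query, key, and value projections
attn_out = d_model * d_model           # attention output projection
mlp = d_model * d_ff + d_ff * d_model  # MLP up- and down-projections
per_block = qkv + attn_out + mlp

# Final scores over the vocabulary (GPT-2 reuses its token embedding matrix here).
unembed = d_model * n_vocab

total = n_layer * per_block + unembed
print(f"{per_block:,} per block")  # 7,077,888 per block
print(f"{total:,} per token")      # 123,532,032 per token
```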

To be blunt, it’s not that GPT-2-small can’t reliably do arithmetic. It can do arithmetic just fine. It’s just that the typical LLM user has no idea they’ve asked a different question.

From the outside, when you type “1+1=” into a calculator and into an LLM, it superficially looks like you’ve asked both of them the same question: “what is 1+1?”

Calculators are relatively simple. Their input options are restricted to a few dozen buttons, with the logic programmed in a relatively straightforward manner, so most people can easily enter the question they intended to ask. Even with something as simple as a calculator, though, we humans are still extremely capable of accidentally asking an unintended question.

(See this fascinating video for how you could get the wrong value for the reciprocal of 2√3 if you don’t understand how to ask your calculator for the intended value. Perhaps you have experienced this yourself when you borrowed a friend’s HP calculator after getting used to the TI series. Or try asking the high schoolers near you who are about to take a calculator-permitted exam; I’m sure one of them will have recent examples.)
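One plausible way to end up with the wrong reciprocal, sketched in Python: type the expression left to right without brackets. Python has no implied multiplication, and calculators differ in how they treat implied multiplication such as 2√3, so which mistake a given device invites is model-specific; this only shows the two readings side by side.

```python
import math

root3 = math.sqrt(3)

# Typed as "1 / 2 * sqrt(3)": division and multiplication share precedence and
# are evaluated left to right, so this is (1 / 2) * sqrt(3), not the reciprocal.
typed_naively = 1 / 2 * root3

# The intended question puts brackets around the whole denominator.
intended = 1 / (2 * root3)

print(round(typed_naively, 4))  # 0.866
print(round(intended, 4))       # 0.2887
```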

LLMs, on the other hand, are more flexible, and therefore more complicated. That means they can answer a larger variety of questions. It also means you can shoot yourself in the foot a lot more easily. Those who laugh at or dismiss LLMs for being bad at answering “simple” questions do so because they do not realize that LLMs are answering the question they actually asked, not the question they thought they were asking.

The vast majority of LLM users have no idea what question they are actually asking when they enter a prompt into an LLM, even if they’re the ones growing the models (case in point: OpenAI’s GPT-4o sycophancy issue and rollback). That’s because no one except mechanistic interpretability researchers can even begin to tell you what’s going on when you ask a decent-sized GPT what “1+1” is (some sort of trigonometry, apparently).
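A toy illustration of the general idea behind that trigonometry remark. Interpretability work has reported models representing numbers as angles on circles (or helices) and combining them with something like the angle-addition identities. The sketch below assumes a single circle with a hypothetical period of 10; it is not a description of what GPT-2 or any other particular model actually computes.

```python
import math

T = 10  # hypothetical period: think of the digits on a 10-hour clock face

def to_angle(n: int) -> tuple[float, float]:
    """Represent n as a point (cos, sin) on a circle instead of as binary bits."""
    theta = 2 * math.pi * n / T
    return math.cos(theta), math.sin(theta)

def add_on_circle(a: int, b: int) -> int:
    ca, sa = to_angle(a)
    cb, sb = to_angle(b)
    # Angle-addition identities: cos(x + y) and sin(x + y) from the parts.
    c = ca * cb - sa * sb
    s = sa * cb + ca * sb
    theta = math.atan2(s, c) % (2 * math.pi)
    # Read the angle back off as a position on the clock face.
    return round(theta * T / (2 * math.pi)) % T

print(add_on_circle(1, 1))  # 2
print(add_on_circle(7, 5))  # 2, because this circle wraps around every 10
```

The answer comes out right, but only as a side effect of rotating points on a circle; nothing here resembles the adder circuit on the calculator side.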

This point applies doubly to commercial LLMs, whose system prompts can add thousands of extra words in front of the words you type into the chatbot interface.
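A sketch of how far the model’s actual input can drift from what you typed. The system prompt and the tag-based chat template here are hypothetical stand-ins; real products use their own, often unpublished, prompts and formats.

```python
# Hypothetical stand-in for a long system prompt (2,500 words).
system_prompt = "You are a helpful assistant. " * 500

def build_model_input(system: str, user: str) -> str:
    """Hypothetical chat template: the system prompt goes in front of your text."""
    return f"<system>\n{system}\n</system>\n<user>\n{user}\n</user>\n<assistant>\n"

user_text = "1+1="
model_input = build_model_input(system_prompt, user_text)

# Words you typed vs. words the model actually receives.
print(len(user_text.split()), len(model_input.split()))  # 1 2506
```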

So yes.

You are absolutely asking a different question when you ask a calculator to compute 1+1 than when you ask an LLM to compute 1+1. It is in fact a minor miracle that the latest LLMs can be wrangled into doing somewhat accurate arithmetic in such a roundabout way.

I consider it almost on par with the way biological neurons sending electrical spikes every which way can somehow be wrangled into letting your brain calculate 36+59 mentally in math class when needed.

The problem lies with us humans, who have this strange quirk of our brains not automatically informing us when we’ve accidentally asked a different question.

Every time I see people who should know better on X or wherever post their opinions on whether LLMs are conscious, whether LLMs can actually reason or do math, whether they are reasoning or mathing similarly to humans or not (because every human over the age of 10 can get 1+1 correct right?), I despair at the depths of philosophical confusion they have about both LLMs and humans.

This confusion does not bode well for AI Safety.

I’ve tried to articulate and clarify one low-level, fundamentally simple confusion as clearly as I can, because humanity needs to truly grasp what AI brains do in comparison to human brains if we want to remain in control of our existence for the next millennium or so. And the first step is to reduce dismissive attitudes towards LLMs where those attitudes are based on inaccurate beliefs.

The worry about humanity’s chances against deep-learning-based AI will come as a natural consequence of clearly understanding our comparative (dis)advantages, I hope.

Whether thoughtfulness and sensibility are helpful in policy advising, though, I can’t say.