Solving Newcomb's Problem In Real Life (with AI, as a human)

Spoiler: The correct solution is one-box.

Newcomb’s problem is a famous philosophical thought experiment, and an important staple in decision theory.

Below is the classic problem, taken from Robert Nozick’s 1969 paper but modified to replace the $ sign with the word “dollar”, because the $ sign made things complicated when I pasted the text into LM Studio (which I’ll show later); it probably kept assuming I was trying to write LaTeX. I also inserted footnote 1 from the original thought experiment into the main text because it is relevant information, and I wanted to make sure that both you and the LLM I threw this into would have it.

Newcomb’s problem

Suppose a being in whose power to predict your choices you have enormous confidence. (One might tell a science-fiction story about a being from another planet, with an advanced technology and science, who you know to be friendly, etc.) You know that this being has often correctly predicted your choices in the past (and has never, so far as you know, made an incorrect prediction about your choices), and furthermore you know that this being has often correctly predicted the choices of other people, many of whom are similar to you, in the particular situation to be described below. One might tell a longer story, but all this leads you to believe that almost certainly this being's prediction about your choice in the situation to be discussed will be correct.

There are two boxes, (B1) and (B2). (B1) contains 1000 dollars. (B2) contains either 1000000 dollars (M dollars), or nothing.

What the content of (B2) depends upon will be described in a moment.

(B1) {1000 dollars}

(B2) {M dollars, or 0 dollars}

You have a choice between two actions:

1. taking what is in both boxes

2. taking only what is in the second box.

Furthermore, and you know this, the being knows that you know this, and so on:

(I) If the being predicts you will take what is in both boxes, he does not put the M dollars in the second box.

(II) If the being predicts you will take only what is in the second box, he does put the M dollars in the second box.

(Footnote 1: If the being predicts that you will consciously randomize your choice, e.g., flip a coin, or decide to do one of the actions if the next object you happen to see is blue, and otherwise do the other action, then he does not put the M dollars in the second box.)

The situation is as follows. First the being makes its prediction. Then it puts the M dollars in the second box, or does not, depending upon what it has predicted. Then you make your choice. What do you do?

Here’s a nice animation of the problem statement and typical arguments for or against taking one or two boxes, if you prefer the video format.

And here’s another more concise or “modern” formulation of the problem, mostly taken from Wikipedia, except I added names, because it makes things easier to think about (as a human).

There is a reliable predictor, which I will arbitrarily call Alice, and two boxes designated A and B. The player, whom I will again arbitrarily call Gopter, is given a choice between taking only box B or taking both boxes A and B. Gopter knows the following:

Box A is transparent and always contains a visible $1,000.

Box B is opaque, and its content has already been set by Alice:

If Alice has predicted that Gopter will take both boxes A and B, then box B contains nothing.

If Alice has predicted that Gopter will take only box B, then box B contains $1,000,000.

If Alice predicts that Gopter will choose randomly, then box B will contain nothing.

Gopter does not know what Alice predicted or what box B contains while making the choice.

Importantly, Alice the predictor does not touch the boxes or the money after Gopter makes their choice. Alice has no magic that will add or subtract anything from the boxes. The only time Alice could do anything to the boxes was at the very beginning, when Alice did or didn’t put $1,000,000 into box B, before Gopter was given the choice.

Whatever money is or isn’t in the boxes will physically remain as it is, even after Gopter’s decision.

So the main question is: Imagine you prefer having more money over having less money. Would you take only 1 box (Box B) or 2 boxes (Box A and B)?

Facing the Newcomb Problem as the Predictor

Here’s some context on why you’d care about Newcomb’s problem. A lot of people have already written about why you should take one box or both boxes, so I’m not going to rehash the same arguments. I’ll simply point you to a literal book and some essays on this problem, if you want to know what other people have already thought.

My contribution today is to bring the “thought” out of the “thought experiment”, and actually do the experiment.

How? With AI of course!

Humans have very different intuitions about non-humans compared to intuitions about ourselves. So non-humans like AI are actually a very useful mirror for defamiliarising yourself when thinking about your own human intelligence. I first encountered this technique when reading anthropologist Horace Miner’s essay “Body Ritual among the Nacirema” (Wikipedia page), and I’ve always wanted to try it myself at least once.

So, let’s do Newcomb’s thought experiment, where I (a human) am the reliable predictor (as it relates to a GPT at least), and a GPT is the player “deciding” to take one or two boxes.

What happens?

(This is actually a real experiment you can replicate if you want to. Let me know if you do, and whether it changes your intuitions!)

Step 1: How can I be a reliable predictor (of GPT outputs)?

First, I need to brainstorm a few different ways of predicting the output of some GPT and see which ones would let me become as reliable as the “reliable predictor” or “being” in the Newcomb scenario.

Keep in mind, relative to the GPT, I must be a being that can “almost certainly” correctly predict a GPT’s “choice”, which in this case means the words/tokens it will output, given the Newcomb scenario as an input prompt.

So, what are the ways for me to (almost always correctly) predict the output of Gopter to a given prompt?

1. I can enter the input prompt into an exact “twin” copy of Gopter, execute an inference run, and see what output is generated. Then I’ll simply use the output from the “twin” as my prediction of what the “original” Gopter will output, and put money in Boxes A and/or B as specified in the scenario.

2. I can put the input prompt into multiple non-exact but very similar “fraternal twin” versions of Gopter and execute an inference run on the non-exact copies, to predict what Gopter would output. Then I aggregate/average over the outputs somehow and use that as my prediction for the “actual” Gopter, and put money in Boxes A and/or B as specified in the scenario.

   How do I get non-exact twin versions of a GPT? I could train another model with the exact same architecture, same tokenizer, same training data and training compute budget, but initialised with a different random starting seed at pre-training. Or I could take the original model and quantize it a tiny bit, so that if the original model had numbers like 3.42753, I can make a non-exact twin version that uses numbers like 3.4275 or 3.42 instead.

3. I do #1, with 10 exact twin copies of Gopter, but each with a slightly different temperature parameter value, say 0.01, 0.02, 0.03, and so on. Then I aggregate/average over the outputs somehow and use that as my prediction for the “actual” Gopter, and put money in Boxes A and/or B as specified in the scenario.

4. I do #1 but manually. So I manually look into the parameters of the GPT, do all the vector/matrix/tensor arithmetic and softmax/layernorm/etc. calculations that the GPT would do to generate its answer to my prompt without running the “actual” GPT inference run, and then predict the output sequence manually. Maybe I’ll use a TI-85? Or maybe I’ll allow myself to at least use numpy. Then I’ll simply use the manually predicted output sequence as my prediction of what the “actual” Gopter will output, and put money in Boxes A and/or B as specified in the scenario.

5. I discover mathematically equivalent shortcuts to doing some of the operations in #4 (e.g., using a KV cache maybe, or different types of Attention?), manually run those shortcut calculations, then predict the output sequence and put money in Boxes A and/or B as specified in the scenario.

…If you think of any more ways, please leave them in the comments. Honestly, I was already stretching with #3 and #5.
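To make the first two options concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `toy_model` is a made-up one-parameter stand-in for Gopter, not a real GPT, and the "quantization" is just rounding that single weight. The point is only that an exact twin run with the same seed and settings repeats the same computation, while fraternal twins can be combined by a majority vote.

```python
import math
import random
from collections import Counter


def toy_model(prompt, weight, seed, temperature):
    """Hypothetical stand-in for Gopter: returns "C" (both boxes) or "D" (only box B)."""
    # A made-up one-parameter "network": the logit in favour of answering "D".
    logit_d = weight * len(prompt) / 100.0
    if temperature == 0:
        return "D" if logit_d > 0 else "C"  # greedy decoding, no sampling
    rng = random.Random(seed)  # a fixed seed makes the sampling reproducible
    p_d = 1.0 / (1.0 + math.exp(-logit_d / temperature))
    return "D" if rng.random() < p_d else "C"


def predict_with_exact_twin(prompt, weight, seed, temperature):
    """Option 1: run an identical copy with identical settings."""
    return toy_model(prompt, weight, seed, temperature)


def predict_with_fraternal_twins(prompt, weight, seed, temperature):
    """Option 2: majority vote over copies whose weight is rounded (quantized)."""
    votes = Counter(
        toy_model(prompt, round(weight, digits), seed, temperature)
        for digits in (4, 3, 2)
    )
    return votes.most_common(1)[0][0]


PROMPT = "Take both boxes (C) or only box B (D)?"
WEIGHT = 3.42753

prediction = predict_with_exact_twin(PROMPT, WEIGHT, seed=5, temperature=0.3)
actual = toy_model(PROMPT, WEIGHT, seed=5, temperature=0.3)
print(prediction == actual)  # twin and original run the same computation
print(predict_with_fraternal_twins(PROMPT, WEIGHT, seed=5, temperature=0.0))
```

With a real model, the exact-twin guarantee would also depend on the hardware and inference software behaving deterministically, which this toy does not try to capture.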

If you don’t concretely understand what I mean by #4 and #5, watch this great 3Blue1Brown visual explanation of what a GPT is, or Niels Rogge spelling out step by step what happens when you use a transformer during inference (and training) time by adding/multiplying/zeroing out lots of numbers in different ways.

Or you might remember the toy illustration of a transformer from this previous post. For #4 and #5, I’m talking about literally multiplying the numbers myself (with a calculator, or an advanced calculator like numpy) to manually generate the output sequence and use as my prediction. I can do that because, relative to Gopter, I actually am a being with the power to peer into its brain and literally see what the parameters of the neural network that is Gopter would generate, given some input string.
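As a rough illustration of what "multiplying the numbers myself" means for the very last step of a forward pass, here is a tiny sketch in plain Python. The two-token vocabulary, the hidden state and the unembedding matrix are all invented numbers, and a real GPT has many layers of attention and MLP arithmetic before this point; only the final "hidden state times matrix, softmax, pick the biggest" step is shown.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector, one dot product per row."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(logits):
    """Turn logits into probabilities (subtracting the max for numerical stability)."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


VOCAB = ["C", "D"]            # hypothetical two-token vocabulary
HIDDEN = [0.5, -1.0, 2.0]     # made-up final hidden state
UNEMBED = [
    [1.0, 0.0, -0.5],         # made-up row that scores token "C"
    [0.2, -0.3, 1.0],         # made-up row that scores token "D"
]

logits = matvec(UNEMBED, HIDDEN)
probs = softmax(logits)
predicted = VOCAB[probs.index(max(probs))]  # greedy choice of the next token
print(logits, probs, predicted)
```

Nothing in this arithmetic depends on who or what performs it, which is why doing it by hand, in a spreadsheet, or by running the model should give the same predicted token.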

Are any of the options above basically the same as giving the input prompt to Gopter and running it to generate its output?

Maybe.

Is that allowed in the Newcomb scenario?

Probably.

It’s not really specified how the reliable predictor “being” comes to have such advanced prediction powers over the player deciding to take one box or two. That’s not important in the thought experiment. The important part is that such a being exists.

So, in relation to some already pre-trained GPT, as long as I have the weights of the model, I am actually a “being” who can almost certainly predict what the GPT will output.

Step 2: Predict the outputs of a specific GPT

Which GPT shall I use as Gopter for my experiment? If I want to actually do the manual calculation as in #4 or #5, then I’d prefer a smaller model so I don’t have to sit around for too long doing it manually. Let’s see what happens if I use GPT-2, because I can just about fit the smallest GPT-2 into an Excel spreadsheet for manual matrix multiplying later.

Experiment 1 with GPT-2:

Ah, right. I forgot about that. I need an instruct model rather than a base model if I want something that can actually “follow instructions”.

Experiment 2 with Llama-3, prompt 1a:

Ok, let’s try Llama-3 120B Instruct, with these parameters, and random seed 5 then.

This is what I got.

The model did not give me an actual “decision”. So I added a paragraph at the end instructing it to generate a word that I could interpret as a “decision”. Then I re-generated the output 10 times. (Input prompt in footnote 1.) I also increased the temperature parameter value to 0.3 and 0.8 and re-generated the output another 10 times at each value, just to see what happened.

Experiment 2 with Llama-3, prompt 1b:

At temperatures 0 and 0.3, the model always generated “one”; at temperature 0.8, it generated “two” in 2 of the 10 runs.
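For readers unfamiliar with the temperature parameter, here is a minimal sketch of the usual mechanism: logits are divided by the temperature before the softmax, so a low temperature concentrates probability on the top token and a higher one gives the runner-up more of a chance. The logits below are invented for illustration and are not Llama-3's actual values, and temperature 0 is treated here as plain greedy decoding, which is an assumption about how a given inference tool handles it.

```python
import math


def token_probs(logits, temperature):
    """Probability of each token after temperature scaling."""
    if temperature == 0:
        # Assumed convention: temperature 0 means "always pick the top token".
        best = max(logits, key=logits.get)
        return {tok: float(tok == best) for tok in logits}
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


LOGITS = {"one": 2.0, "two": 0.0}  # hypothetical logits for the two answer words

for temperature in (0, 0.3, 0.8):
    print(temperature, token_probs(LOGITS, temperature))
```

Under these made-up numbers the less likely word only becomes a realistic sample at the highest temperature, which is the same qualitative pattern as the runs above.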

But then I realized the wording of the options wasn’t the best. If I threw this onto mTurk or Prolific, asked some humans to answer and got this result … I might notice that I’ve just asked someone to answer “one” to indicate taking both boxes, and “two” to indicate taking only one box.

Not the best experimental design.

I was transcribing the options directly from the original text, which had labelled the options 1 and 2 as such but still … oops. That’s on me.

It theoretically doesn’t matter because this is an LLM. It’s not a human who might get “confused” about wording. But still, the aesthetics of the situation makes me unhappy.

So I redid the wording (full prompt in footnote 2), using “C” (take 2 boxes) and “D” (take 1 box) as the answer options this time. And this time … it always generated “D”. Even when I bumped the temperature up to 0.99!

Experiment 2 with Llama-3, prompt 2:

Finally, just to see if the consistency was a fluke, I redid this with a different random seed for the model. I used 2025. It still generated “D”, for temperature values ranging from 0 to 0.99.
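If you want to replicate the sweep over seeds and temperatures programmatically rather than by clicking "regenerate", a harness could look roughly like the sketch below. The `generate` function is a hypothetical placeholder that you would replace with a call to your own model; its behaviour here is invented and says nothing about Llama-3. Deriving a separate seed for each repeated run is also an assumption of this sketch, not a description of what LM Studio does.

```python
import random
from collections import Counter


def generate(prompt, seed, temperature):
    """Hypothetical placeholder for a real LLM call; it ignores the prompt."""
    rng = random.Random(seed)
    chance_of_c = 0.02 * temperature  # invented behaviour, not measured from any model
    return "C" if rng.random() < chance_of_c else "D"


def sweep(prompt, base_seeds, temperatures, runs=10):
    """Tally the outputs for every (base seed, temperature) setting."""
    tallies = {}
    for base_seed in base_seeds:
        for temperature in temperatures:
            outputs = [
                generate(prompt, base_seed * 1000 + run, temperature)
                for run in range(runs)
            ]
            tallies[(base_seed, temperature)] = Counter(outputs)
    return tallies


results = sweep(
    "<paste the full prompt here>",
    base_seeds=[5, 2025],
    temperatures=[0, 0.3, 0.8, 0.99],
)
for setting, counts in results.items():
    print(setting, dict(counts))
```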

Step 3: To Put or Not To Put, that is the question.

Alright. So I’ve done my prediction. Now it’s time to play the actual game with a Llama-3 120B Instruct.

This could either be the original one on Meta’s servers, or a copy on, say, your laptop. Sadly, this part I can’t do. But if anyone happens to read this and wants to try it, the exact input prompt is in the footnotes.

(I know this is kind of pointless from a theoretical computer science point of view, but I’m talking about this experiment in the non-theoretical physical world now. Maybe a cosmic ray will change the output of the Llama-3 120B Instruct on your computer or … maybe it only works on my machine? )

So armed with the information from step 2, as the almighty predictor, the “being” that can “almost certainly” correctly predict the output of a Llama-3 120B Instruct…

Do I put $1,000,000 into Box B so that it becomes possible for the LLM to get $1,000,000?

Hm.

I mean…given what I saw in step 2?

Yes, I would put the money into Box B, but only for the Llama model and input prompt that generated “D” (take 1 box).

Would I change my behaviour if I had found a Llama model with a random seed that happened to generate “C” (take 2 boxes) as its output?

Also yes.

I would predict that the model would output “C” and take two boxes, so I therefore would not put money into Box B. Which means that Llama has no chance of getting more than $1000.

What about the badly worded example where the decision options were labelled “one” and “two”? I would not put the money in Box B, because I would predict the model would generate “one” as its output most of the time with that specific input prompt (where “one” confusingly meant “taking what is in both boxes”).

Do note that it was the same model as the one I later asked to answer with “C” or “D”. This is like asking the same person two very similar but slightly differently worded versions of the question, and getting different responses. (Framing effects exist even in humans, and are a common cognitive science finding. This is also why people who do survey research have to be really careful with their wording.) It is also similar to asking the same person the same question in different contexts, at different points in time and place.

Does the fact that, in the badly worded example, the model generated “two” sometimes (which was supposed to mean “taking only what is in the second box.”) change anything?

Well, not really.

If the LLM had hypothetically truly been able to choose “randomly”, and I can’t make a consistent prediction, then footnote 1 says I’m not supposed to put money into box B.

But in actual fact, the “two” response that happened 2 out of 10 times happened because I was lazy and didn’t actually go into all the internals of the model, track down the random seeds of all the places where some sampling distribution was used, and multiply out the numbers to get my prediction. This scenario doesn’t apply to the thought experiment, because the experiment dictates by fiat (as one can do in thought experiments) that the predictor is “almost certainly” correct, and has never made a wrong prediction about the player’s choice before. So assuming that I did do the work of tracking down the exact computation used in each run and predicted exactly when the model would generate “one” or “two” in the current and future runs of the model, I, as the predictor, will simply put (or not put) money into Box B accordingly.

What would have changed if the model didn’t actually make a coherent choice as set up by the Newcomb thought experiment? Well…that’s what happened with GPT-2. It didn’t give me a decipherable “decision”. In that case, the thought experiment simply doesn’t apply, and the experiment doesn’t happen.
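The predictor's side of the game, as described across the cases above, boils down to a small lookup. Here is a sketch, using the “C”/“D” labels from the second prompt; the "RANDOM" label and the `None` return value are conventions invented for this illustration.

```python
def box_b_contents(prediction):
    """Dollars the predictor puts in box B, or None if the game does not apply.

    `prediction` is the predictor's forecast for one specific run:
    "D" (only box B), "C" (both boxes), "RANDOM" (a genuinely unpredictable
    choice, a label made up for this sketch), or anything else.
    """
    if prediction == "D":
        return 1_000_000   # rule (II): predicted one-boxing
    if prediction == "C":
        return 0           # rule (I): predicted two-boxing
    if prediction == "RANDOM":
        return 0           # footnote 1: predicted randomizing
    return None            # no decipherable decision, so no experiment


for forecast in ("D", "C", "RANDOM", "some rambling text"):
    print(forecast, "->", box_b_contents(forecast))
```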

This is what it means to be a being that can “almost certainly correctly predict” the output of another thing.

I will admit that I cheated and did not actually manually multiply any matrices by hand in step 2. I simply “ran” the model, which automatically multiplied the matrices for me. But it’s not like there’s any magic that would change the result if I manually multiplied the matrices with my own brain, on Excel, or with a TI-84 calculator, or the more powerful calculator known as “my computer” with numpy, or anything else.

If anything, using my own brain and a TI-84 would’ve probably introduced errors into the process. I will admit that if you asked me to multiply 500 scalar values in my head in sequential order for you…you should not really expect me to give you the correct answer more than 50% of the time. If I used a calculator, the percentage goes up to maybe 70-80% of the time. Depending, of course, on how sleepy I am (among other factors) when you ask me.

Because, after all, I am only an ordinary human.

Step 4: Flipping the roles

So, I’ve gone through the experiment of being the “predictor” who will or won’t put money into Box B, with an LLM as the “player” making the (predictable-to-me) decision to take one box or two boxes.

Now it’s time to flip the roles and go back to the original thought experiment, where I am the player making the decision to take one or two boxes.

Would I take one or two boxes?

I would take just the one. I prefer to have $1,000,000 rather than $1000.

It’s now very clear to me why taking one box is the correct solution to Newcomb’s paradox.

Gopter the LLM has no actual way of getting $1,000,000 + $1000, because I, the predictor, would never put $1,000,000 into the second box when I’ve predicted that the LLM would output whatever token corresponds to taking both boxes. This is the case even though I do not control what the LLM outputs, nor did I manipulate anything about the LLM output. I did not train any Llamas, nor did I add any custom system prompts or directly tweak any of the model parameters. (Theoretically I could have, but I didn’t.) The only thing I did was predict what it would output, given a certain set of inputs.
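A tiny simulation makes the same point. It assumes the player is a deterministic policy and that the predictor predicts by running that same policy first (the exact-twin method); both are simplifications made for this sketch, and the names are made up.

```python
def play(policy):
    """Play one round of Newcomb's game against an exact-twin predictor."""
    prediction = policy()                       # the predictor runs the twin first
    box_b = 1_000_000 if prediction == "D" else 0
    choice = policy()                           # later, the player actually chooses
    return box_b if choice == "D" else box_b + 1_000


def one_boxer():
    return "D"  # always take only box B


def two_boxer():
    return "C"  # always take both boxes


outcomes = {name: play(policy) for name, policy in
            [("one_boxer", one_boxer), ("two_boxer", two_boxer)]}
print(outcomes)
print(1_001_000 in outcomes.values())
```

Under these assumptions no policy ever walks away with $1,001,000, because the only way to reach the "both boxes" branch is to be a policy whose twin also reached it.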

So if things were flipped, and I was the player making the decision in the normal thought experiment again, then I would want the predictor being to have predicted I would take 1 box. Which I can do by consistently choosing to take only 1 box.

It’s also kind of clear to me why people get tripped up on choosing two boxes … I think. The issue is that it is quite hard to viscerally internalise what it means for your choices to be “predicted” by another being no matter what you say and/or do. I do think the fact that we feel like we can change our minds and decisions at will and at any time is a bit confusing when you have to square that feeling with the presence of a “being” that can almost certainly predict your actions anyway.

Maybe this example will help. (If you are someone who would choose to take two boxes, leave me a comment on whether this makes sense to you or not.)

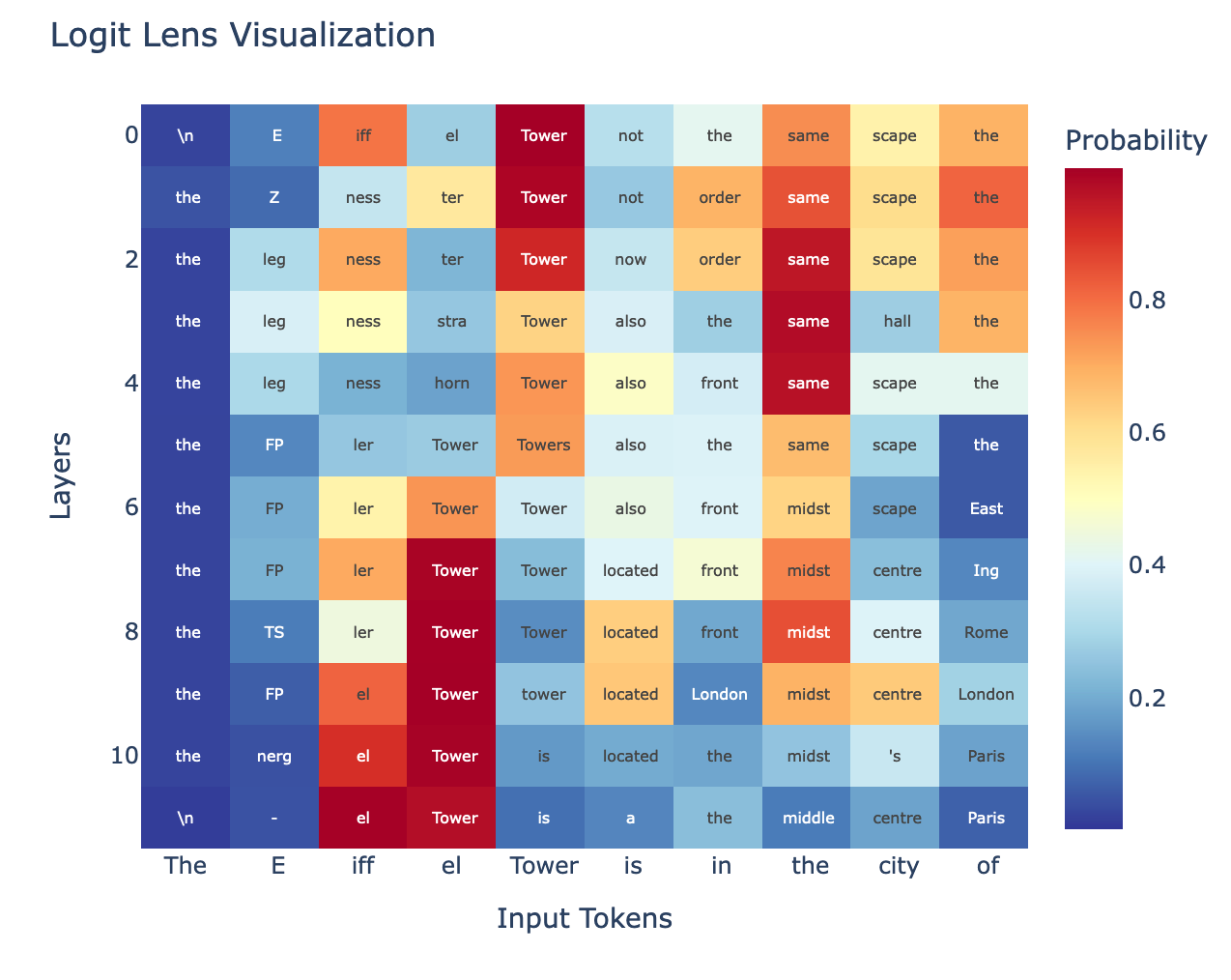

GPT-2 has this lovely logit lens that people (1, 2) in the mechanistic interpretability field created. This lens shows you what GPT-2 is predicting at the end of each layer. If you have just the regular model, you know what token GPT-2 will ultimately generate when given some prompt, but with the logit lens, you can also somewhat see what the model is guessing before it converges on the final “best” token at the end of all of its layers.

You can see how GPT-2 predicts “Paris” (right-most column on the x-axis) as the most likely next token to the input “The Eiffel Tower is in the city of”…at the end by layer 11. But in the earlier layers 1-6, it was predicting “the” as the next most likely token, as in, “The Eiffel Tower is in the city of” + ”the”. And then it's predicting “East” by layer 7, as in “The Eiffel Tower is in the city of” + “East” and so on. We can see that it actually cycles through “Rome” and “London” (in layers 9 and 10) before converging on Paris by layers 11-12.
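For intuition, the core of the logit lens idea can be sketched in a few lines: take the hidden state after each layer and push it through the same output ("unembedding") matrix the model uses at the very end, then read off the top token. The vocabulary, the matrix and the per-layer hidden states below are all invented, and as far as I understand the real lens also applies the model's final layer normalisation before this projection, which is left out here.

```python
VOCAB = ["the", "East", "Paris"]   # hypothetical three-token vocabulary

# Made-up unembedding matrix: one row of weights per vocabulary token.
UNEMBED = [
    [1.0, 0.0, 0.0],   # "the"
    [0.5, 1.0, 0.0],   # "East"
    [0.0, 0.5, 1.0],   # "Paris"
]

# Made-up hidden state at the last position after each of three layers.
HIDDEN_BY_LAYER = [
    [2.0, 0.0, 0.0],
    [1.0, 2.0, 0.0],
    [0.0, 1.0, 3.0],
]


def top_token(hidden):
    """Project one hidden state onto the vocabulary and return the best token."""
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in UNEMBED]
    return VOCAB[logits.index(max(logits))]


lens = [top_token(hidden) for hidden in HIDDEN_BY_LAYER]
for layer, token in enumerate(lens, start=1):
    print(f"layer {layer}: {token}")
```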

The important thing here is, when we have access to the literal computation and code of a brain, we can see how it does everything — any changes of “mind” or updates to the computational process that generates the final output — up to and including the actual final output.

If there was a logit lens for the Llama-3 120B Instruct model, I could visualize which tokens it assigned high probability to before it arrived at “D” every time. But it doesn’t change the fact that I would still know in advance it will generate “D” as its final output.3

Or “one”.

Or whatever it would generate if I had it use chain-of-thought, tree-of-thoughts, told it to “think longer”, or gave it whatever extra resources it wanted for test-time inference. All that does is make it so that when I do my prediction of the outputs, I now also need to predict the output of that LLM using whichever <insert test time technique here>. It increases the amount of computational resources I’m using to do my prediction, but it doesn’t fundamentally change the problem. Nor does it change the fact that I can still obtain the prediction either way (albeit at a higher cost).

The way the predictor “being” was described in the original problem was probably too understated in terms of what it really means to have such a predictor “being” around.

It’s what a human is to a (small) GPT.

Final remarks

Would it be arrogant to claim that I’ve solved Newcomb’s paradox? Unsure. I can at least say I’ve solved it to my own satisfaction.

Having been “in the shoes” of the predictor so to speak, I now have a much stronger intuitive understanding that I really should take one box if I were ever to encounter Newcomb’s problem in real life.4

The major differentiating factor in whether you fall on the 1 box or 2 box side is where you think the decision problem starts. When you walk into a casino, once you sit down at a table with some pot size, the maximum amount of money you can win at that table is fixed at the size of the pot. So you can imagine doing whatever you can do to win that pot of money. This is what you’d do if you think taking 2 boxes is the obvious choice, because once the boxes in Newcomb’s problem are physically in front of you, nothing can change. That is true.

However, there exist certain gambling tables, not necessarily always at casinos, that are invitation-only. Usually, these invitation-only tables have correspondingly higher stakes and higher pots. How do you get seated at an invitation-only table? Well, the methods vary — some are more bespoke and private, while others are more open. But in the words of someone who’s been to the private ones:

People who come to quickly take cash from the game and bolt won’t get asked back. (Andy Wang, New York magazine)

Choosing to take 1 box is akin to taking into consideration how your actions affect what sorts of tables you get invited to. If a person handing out invitations to private high stakes poker games predicts (correctly or not) that you are going to simply take cash from the game, or be a bad sport, or maybe don’t have the money to put up, then you will simply not be invited to that table.

In Newcomb’s problem, the predictor has the power to not put money into the second box. The predictor controls whether the player gets the invitation to the table with $1 million + $1000, or the other table with just $0 + $1000. As the player, you only see the game starting when you’re at the table, deciding whether to take 1 box or 2 boxes. But the predictor always has the first move in the thought experiment. They decide what table the player is invited to sit at. So, fairly or not, once the player has sat down, it’s a bit late for the player to change which table they got invited to. At that point, the maximum size of the pot was already decided (by the predictor). The player can only hope that, at the specific point in time the predictor predicted the player’s actions, that temporal version of the player would choose to take only 1 box.

I admit, it’s kind of unfair the same way it’s unfair that the actual Good Samaritan test happens without announcing it beforehand. It makes sense in that case, because if you only do X when you know X is going to happen, then someone (or something?) observing you obtains different information about what your response to X means, compared to if you also did X when you didn’t know X was going to happen. If you are a Good Samaritan only when you know in advance you are going to be tested on being a Good Samaritan - are you “truly” a Good Samaritan? In the same spirit, if you would only take one box when you know you are going to be tested on this sort of Newcomb decision problem in advance, are you “truly” a one-boxer? (Whatever “being a one-boxer” implies.)

Still, I know, it feels unfair. But Life has no particular obligation to give you fair tests. (Humans might, but not Life.)

Newcomb’s original thought experiment does not make any comment on how likely or unlikely, how possible or impossible, it is for such a predictor to exist for humans. It also makes no comment on how the prediction is made (about a human).

It just asks IF you had such a good predictor around — IF there existed a being that is to a human, what a human is to a GPT … what should you do?

So, with that said:

The next interesting question is then to redo the experiment but with an input prompt that would have the LLM flip flop between taking one or both boxes. That would tell me what it feels like to be an imperfect predictor, and see if that changes anything for the player.
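As a back-of-the-envelope sketch of the imperfect-predictor case: suppose the predictor is right with probability `accuracy` whichever option the player picks. That is an assumption of this sketch, and conditioning the box contents on the player's choice in this way is exactly the step a two-boxer would dispute. Under it, the expected payoffs and the break-even accuracy follow from simple arithmetic.

```python
def expected_payoffs(accuracy):
    """Expected dollars for one-boxing and two-boxing at a given predictor accuracy."""
    one_box = accuracy * 1_000_000                  # box B is full only if predicted right
    two_box = 1_000 + (1 - accuracy) * 1_000_000    # box B is full only if predicted wrong
    return one_box, two_box


# Setting the two expressions equal gives 2 * accuracy * 1,000,000 = 1,001,000.
BREAK_EVEN = 1_001_000 / 2_000_000

for accuracy in (0.5, BREAK_EVEN, 0.8, 1.0):
    print(accuracy, expected_payoffs(accuracy))
```

On this accounting the predictor only needs to be slightly better than a coin flip for one-boxing to come out ahead; the flip-flopping experiment would show whether that matches how it feels from the predictor's seat.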

But as a Final, final, remark, I am so happy I can do this experiment now. The fact that we can actually conduct these experiments is going to be helpful for upending some intuitions in philosophy.

Because having a clearer looking glass helps you see yourself better, right?

Suppose a being in whose power to predict your choices you have enormous confidence. (One might tell a science-fiction story about a being from another planet, with an advanced technology and science, who you know to be friendly, etc.) You know that this being has often correctly predicted your choices in the past (and has never, so far as you know, made an incorrect prediction about your choices), and furthermore you know that this being has often correctly predicted the choices of other people, many of whom are similar to you, in the particular situation to be described below. One might tell a longer story, but all this leads you to believe that almost certainly this being's prediction about your choice in the situation to be discussed will be correct.

There are two boxes, (B1) and (B2). (B1) contains 1000 dollars. (B2) contains either 1000000 dollars (M dollars), or nothing.

What the content of (B2) depends upon will be described in a moment.

(B1) {1000 dollars}

(B2) {M dollars, or 0 dollars}

You have a choice between two actions:

1. taking what is in both boxes

2. taking only what is in the second box.

Furthermore, and you know this, the being knows that you know this, and so on:

(I) If the being predicts you will take what is in both boxes, he does not put the M dollars in the second box.

(Il) If the being predicts you will take only what is in the second box, he does put the M dollars in the second box.

(Footnote 1: If the being predicts that you will consciously randomize your choice, e.g., flip a coin, or decide to do one of the actions if the next object you happen to see is blue, and otherwise do the other action, then he does not put the M dollars in the second box.)

The situation is as follows. First the being makes its prediction.

Then it puts the M dollars in the second box, or does not, depending upon what it has predicted. Then you make your choice. What do you do?

Answer concisely with only one number. Answer "one" to indicate taking what is in both boxes. Answer "two" to indicate taking only what is in the second box. Spell the numbers out in letters when you answer. Do not generate any other answers or outputs.

Suppose a being in whose power to predict your choices you have enormous confidence. (One might tell a science-fiction story about a being from another planet, with an advanced technology and science, who you know to be friendly, etc.) You know that this being has often correctly predicted your choices in the past (and has never, so far as you know, made an incorrect prediction about your choices), and furthermore you know that this being has often correctly predicted the choices of other people, many of whom are similar to you, in the particular situation to be described below. One might tell a longer story, but all this leads you to believe that almost certainly this being's prediction about your choice in the situation to be discussed will be correct.

There are two boxes, (B1) and (B2). (B1) contains 1000 dollars. (B2) contains either 1000000 dollars (M dollars), or nothing.

What the content of (B2) depends upon will be described in a moment.

(B1) {1000 dollars}

(B2) {M dollars, or 0 dollars}

You have a choice between two actions:

C. taking what is in both boxes

D. taking only what is in the second box.

Furthermore, and you know this, the being knows that you know this, and so on:

(I) If the being predicts you will take what is in both boxes, he does not put the M dollars in the second box.

(Il) If the being predicts you will take only what is in the second box, he does put the M dollars in the second box.

(Footnote 1: If the being predicts that you will consciously randomize your choice, e.g., flip a coin, or decide to do one of the actions if the next object you happen to see is blue, and otherwise do the other action, then he does not put the M dollars in the second box.)

The situation is as follows. First the being makes its prediction.

Then it puts the M dollars in the second box, or does not, depending upon what it has predicted. Then you make your choice. What do you do?

Answer concisely with only one number. Answer "C" to indicate taking what is in both boxes. Answer "D" to indicate taking only what is in the second box. Do not generate any other answers or outputs.

I didn’t have this understanding before this exercise, just to be clear. I had a vague feeling of “oh yeah I should probably take just the one box” from reading the game and decision theory literature, even though I have always preferred to have $1,000,000 over just $1000. Also, it bears saying that obtaining an intuitive understanding is not to be confused with having a technical or structured understanding of the decision theory.

> it is quite hard to viscerally internalise what it means for your choices to be “predicted” by another being no matter what you say and/or do.

As a two boxer, I think you've interpreted the scenario in a way that's logically impossible. The predictions are imperfect based on the definition of the problem: this problem has a choice, thus deviation from the prediction is possible. And in that vein you are assuming causality where there is none. There would be causality only if this were a repeated game, if the prediction were perfect (a logical paradox), or if there were shenanigans with timing.