For reasons outlined in my previous post, I have been wondering about how to explain chess like it’s a word game without any reference to visual or spatial imagery (which is different to visual imagination, by the way).

Here’s an attempt, following somewhat the rules laid out here, the notation parts informed by here and sometimes here, with some changes in notation as needed to convey the normal rules of chess. It’s not a complete description of the game (see: pawns), but it’s something to start from in case anyone wanted to attempt thought experiment 2 as the Teacher and needed a starting point.

Game explanation

Setup and Objective

Chess is a two-player, sequential, strategy word game.

The goal of the game is to force your opponent into a state called “checkmate”, where your opponent’s “King” is threatened with permanent deletion (i.e., “Capture”, see below) on the next turn and has no remaining valid move that avoids it.

The game can also end when one player resigns or when there is a draw.

Rules

Each player controls 16 characters, sometimes also known as “pieces”:

1 “King” abbreviated as “K”

1 “Queen” abbreviated as “Q”

2 “Rooks” abbreviated as “R”

2 “Bishops” abbreviated as “B”

2 “Knights” abbreviated as “N”

8 “Pawns”, which usually have no abbreviation in standard chess notation, but are abbreviated as “P” for now.

The game starts with a 64 word sentence, and is played by one player modifying one word in that sentence on their turn to generate the next sentence.

The starting player is “Alice”, also sometimes known as “White”. The other player who goes next is “Bob” or “Black”.

Each word in the sentence is made up of a lowercase letter and a number. The letters range from a-h and the numbers from 1-8. The lowercase letter always precedes the number. There are no words beyond the specified range of letters and numbers.
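As a minimal sketch of the vocabulary described above, the 64 words can be enumerated directly. The names `LETTERS`, `NUMBERS`, `all_words` and `is_word` are made up for illustration and are not part of any chess notation standard.

```python
LETTERS = "abcdefgh"
NUMBERS = "12345678"


def all_words():
    """List every letter-number word, in the order used by the starting sentence."""
    return [letter + number for number in NUMBERS for letter in LETTERS]


def is_word(text):
    """True only for a lowercase letter a-h followed by a number 1-8."""
    return len(text) == 2 and text[0] in LETTERS and text[1] in NUMBERS


print(len(all_words()))              # 64
print(is_word("e4"), is_word("i9"))  # True False
```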

Each player’s piece starts out being appended to the beginning of a specific word in the sentence.

The starting 64-word sentence of every game is always:

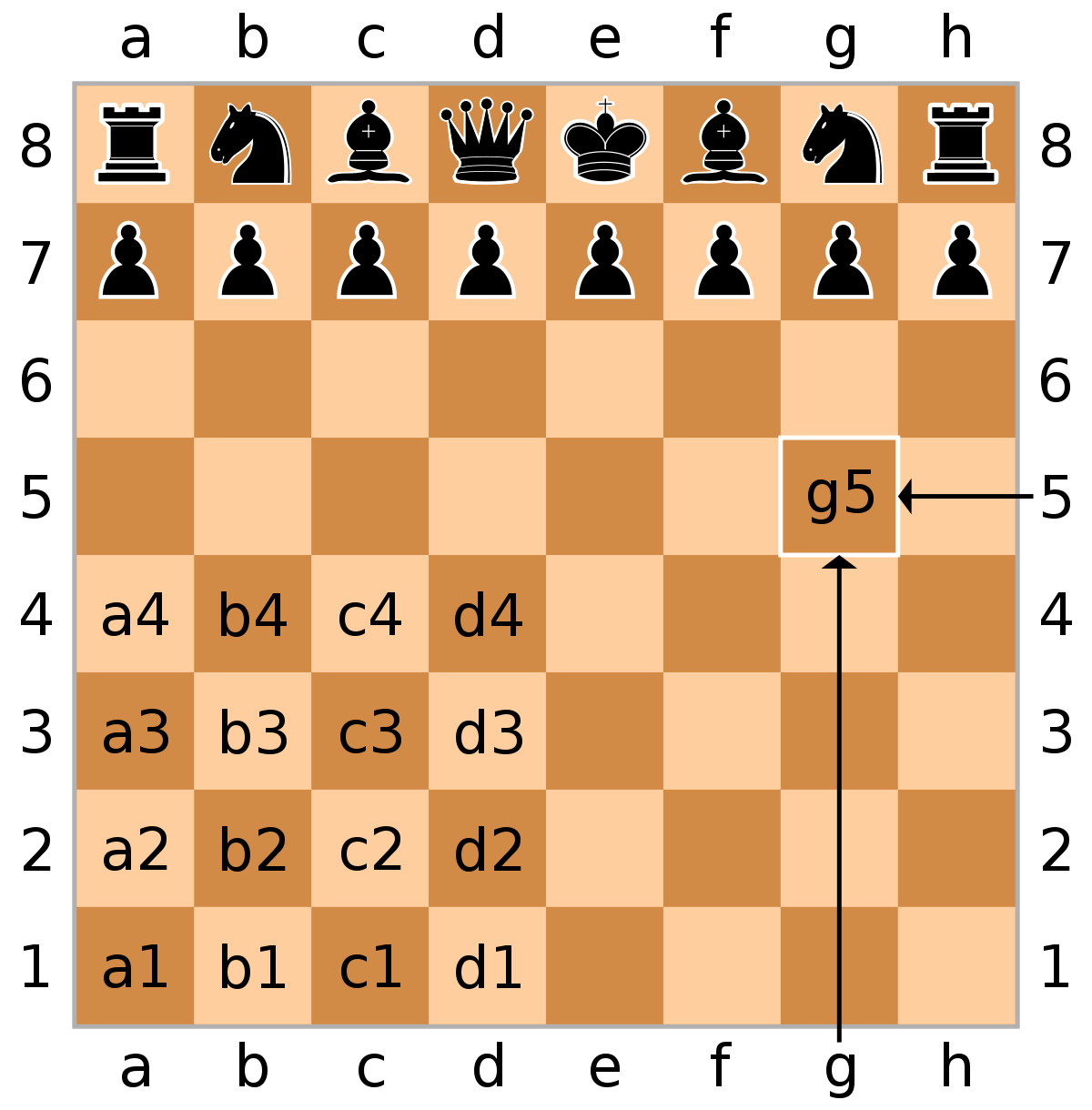

Ra1, Nb1, Bc1, Qd1, Ke1, Bf1, Ng1, Rh1, Pa2, Pb2, Pc2, Pd2, Pe2, Pf2, Pg2, Ph2, a3, b3, c3, d3, e3, f3, g3, h3, a4, b4, c4, d4, e4, f4, g4, h4, a5, b5, c5, d5, e5, f5, g5, h5, a6, b6, c6, d6, e6, f6, g6, h6, Pa7, Pb7, Pc7, Pd7, Pe7, Pf7, Pg7, Ph7, Ra8, Nb8, Bc8, Qd8, Ke8, Bf8, Ng8, Rh8.
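The starting sentence is regular enough to generate rather than memorise. The sketch below assumes the standard chess arrangement, with the Queen on the “d” words and the King on the “e” words. `BACK_ROW` and `starting_sentence` are hypothetical names chosen for this example.

```python
LETTERS = "abcdefgh"
# Assumed standard arrangement of the pieces on the "1" and "8" words.
BACK_ROW = "RNBQKBNR"


def starting_sentence():
    """Build the 64-word starting sentence as a list of words."""
    words = []
    for number in range(1, 9):
        for index, letter in enumerate(LETTERS):
            word = f"{letter}{number}"
            if number in (1, 8):
                word = BACK_ROW[index] + word  # Rook, Knight, Bishop, ...
            elif number in (2, 7):
                word = "P" + word              # Pawns
            words.append(word)
    return words


print(", ".join(starting_sentence()) + ".")
```

Note that nothing in a word says whether a piece belongs to Alice or Bob; ownership is only implied by the number in the word at the start of the game.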

Because this is the starting sentence for all games, it is assumed, and therefore not usually written down on a game record.

In a typical game record, turns are generally written in one of two ways. The first is in two columns, as Alice/Bob pairs, preceded by the move number and a period:

1. Pe4 Pe5

2. Nf3 Nc6

3. Bb5 Pa6

or horizontally:

1. Pe4 Pe5 2. Nf3 Nc6 3. Bb5 Pa6
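Both layouts carry the same information, which a small parser makes concrete. This is only a sketch: `parse_record` is a hypothetical helper, and it assumes every turn starts with a number followed by a period, as in the examples above.

```python
def parse_record(record):
    """Split a game record into (move number, [Alice's move, Bob's move]) turns."""
    turns = []
    for token in record.split():
        if token.endswith(".") and token[:-1].isdigit():
            # A token like "2." opens a new turn.
            turns.append((int(token[:-1]), []))
        elif turns:
            # Anything else is a move belonging to the most recent turn.
            turns[-1][1].append(token)
    return turns


horizontal = "1. Pe4 Pe5 2. Nf3 Nc6 3. Bb5 Pa6"
columns = "1. Pe4 Pe5\n\n2. Nf3 Nc6\n\n3. Bb5 Pa6"
print(parse_record(horizontal))
print(parse_record(horizontal) == parse_record(columns))  # True
```

Because the parser splits on any whitespace, the column layout and the horizontal layout produce the same list of turns.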

In the starting sentence, Alice controls the 16 pieces appended to words containing the number “1” or “2”. Bob controls the 16 pieces appended to words containing “7” or “8”.

The next sentence is generated by the current player un-appending one piece from its current word and appending it to another allowed word.

Game play

Players take turns to generate new sentences one after another, sequentially.

Players cannot skip a turn in this game. On each turn, the player must make one and only one modification to the sentence. If the player cannot make an allowed modification to the sentence, they lose or draw the game.

Pieces cannot be appended to multiple words in the same sentence.

Multiple pieces cannot be appended to the same word in the same sentence.

Pieces cannot un-append and then re-append to the same word in the same sentence.

Sentences may not be edited by either player once the turn ends.

“Capture”: A piece can remove another piece from the sentence by appending itself to a word that already has an existing piece appended to it. Once a piece is “captured” it is permanently deleted from all following sentences. This is denoted by adding an “x” immediately before the destination word.

Words that have no piece appended to them are sometimes said to be “unoccupied”, “vacant” or “free”.
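The game play rules above can be sketched as a single function that takes a sentence and returns the next one. `apply_move` is a hypothetical name, and the sketch deliberately ignores which player owns a piece and whether the piece is allowed to reach the destination; it only covers un-appending, re-appending and capture.

```python
def apply_move(sentence, source, destination):
    """Return (next sentence, captured piece) without editing the old sentence."""
    # Map each bare word (e.g. "e2") to its piece letter, or "" if unoccupied.
    pieces = {word[-2:]: word[:-2] for word in sentence}
    if source == destination:
        raise ValueError("a piece cannot re-append to the same word")
    if destination not in pieces:
        raise ValueError("destination is not a word in the sentence")
    if not pieces.get(source):
        raise ValueError("no piece is appended to the source word")
    captured = pieces[destination]       # "" when the destination was free
    pieces[destination] = pieces[source]
    pieces[source] = ""
    next_sentence = [pieces[word[-2:]] + word[-2:] for word in sentence]
    return next_sentence, captured


sentence = ["Ra1", "b1", "Nc1"]
print(apply_move(sentence, "a1", "b1"))  # (['a1', 'Rb1', 'Nc1'], '')
print(apply_move(sentence, "a1", "c1"))  # (['a1', 'b1', 'Rc1'], 'N')
```

Returning a new list rather than changing the old one mirrors the rule that a sentence may not be edited once the turn ends.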

Allowed word modifications:

Each piece has different ways it is allowed to be un-appended and re-appended to words in the sentence.

Except for the Knight, no piece may increment or decrement through a word that already has another piece appended to it while stepping through its allowed letter-number combinations.

None of the pieces are allowed to increment or decrement past letter-number combinations beyond a-h and 1-8, and there is no looping (i.e., the number after 8 is not 1, it simply does not exist).

The Rook is allowed to un-append and re-append to words that have the same letter as its current word, OR words that have the same number as its current word, but not both at the same time.

E.g., if a Rook is at d5, it is allowed to increment/decrement to dX, or Y5, where X is any number 1-8, and Y is any letter a-h (except d5 itself, because the Rook is already appended to it).
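A rough sketch of the Rook rule, combined with the “no incrementing through another piece” and “no looping” rules, might look like this. The names `slide`, `ROOK_STEPS` and `rook_words` are invented for this example, and `occupied` is assumed to be a set of words that currently have a piece appended.

```python
LETTERS = "abcdefgh"
# Change the letter OR the number by one step at a time, never both.
ROOK_STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def slide(word, occupied, steps):
    """Repeat each (letter, number) step until the range ends or a piece blocks."""
    col, row = LETTERS.index(word[0]), int(word[1])
    reachable = []
    for d_col, d_row in steps:
        c, r = col + d_col, row + d_row
        while 0 <= c < 8 and 1 <= r <= 8:  # no words beyond a-h and 1-8
            target = f"{LETTERS[c]}{r}"
            reachable.append(target)
            if target in occupied:
                # The Rook may stop here (a capture) but cannot pass through.
                # Whether the capture is allowed depends on who owns the
                # piece, which this sketch does not track.
                break
            c, r = c + d_col, r + d_row
    return reachable


def rook_words(word, occupied=frozenset()):
    return slide(word, occupied, ROOK_STEPS)


print(sorted(rook_words("d5")))
print("d8" in rook_words("d5", occupied={"d7"}))  # False
```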

The Bishop is allowed to un-append and re-append to words where the letter increments or decrements by some number n or -n, and separately, the number increments or decrements by n or -n from its current word.

E.g., if a bishop’s current word is d5, and n=2, it can append to (d+2)(5+2), so f7. It can also append to f3 because (d+2)(5-2), b7 because (d-2)(5+2), and b3 because (d-2)(5-2).
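The worked example can be checked mechanically. This sketch follows the “n or -n” wording directly rather than stepping one word at a time, so it ignores any pieces in between. `bishop_words` is a hypothetical name.

```python
LETTERS = "abcdefgh"


def bishop_words(word, n):
    """Words reached by changing the letter by +/-n and the number by +/-n."""
    col, row = LETTERS.index(word[0]), int(word[1])
    targets = []
    for letter_sign in (1, -1):
        for number_sign in (1, -1):
            c, r = col + letter_sign * n, row + number_sign * n
            if 0 <= c < 8 and 1 <= r <= 8:  # stay within a-h and 1-8
                targets.append(f"{LETTERS[c]}{r}")
    return targets


print(bishop_words("d5", 2))  # ['f7', 'f3', 'b7', 'b3']
print(bishop_words("d5", 4))  # ['h1']
```

With n=4 only h1 survives, because the other three combinations fall outside a-h or 1-8.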

The Queen is allowed to un-append and re-append to words that the Rook and Bishop are allowed to, following each of their respective rules.

The King is allowed to un-append and re-append to words with any letter and number combination that a Queen is allowed to, but only within a 1 letter or number increment or decrement away from its current word.

E.g., if a King is at f5, it is allowed to go to f6, f4, e5, g5, e6, g6, e4 and g4.
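Here is a small sketch of “King = Queen, but one step only”. It assumes the Queen's steps are simply the Rook's steps plus the Bishop's steps, and it ignores the extra restriction that a King may not move to a word where it could be captured. All names are made up for illustration.

```python
LETTERS = "abcdefgh"
ROOK_STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]
BISHOP_STEPS = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
QUEEN_STEPS = ROOK_STEPS + BISHOP_STEPS  # Queen = Rook + Bishop


def king_words(word):
    """Apply each Queen step exactly once from the current word."""
    col, row = LETTERS.index(word[0]), int(word[1])
    targets = []
    for d_col, d_row in QUEEN_STEPS:
        c, r = col + d_col, row + d_row
        if 0 <= c < 8 and 1 <= r <= 8:
            targets.append(f"{LETTERS[c]}{r}")
    return targets


print(sorted(king_words("f5")))
# ['e4', 'e5', 'e6', 'f4', 'f6', 'g4', 'g5', 'g6']
print(sorted(king_words("a1")))
# ['a2', 'b1', 'b2']
```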

The Knight is allowed to un-append and re-append to words where the letter increments or decrements by 2 then the number increments or decrements by 1, OR the number increments or decrements by 2, then the letter increments or decrements by 1.
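The Knight rule also fits in a few lines. Since the Knight is the one piece that may pass through occupied words, this sketch does not need to know about other pieces at all. `KNIGHT_STEPS` and `knight_words` are hypothetical names.

```python
LETTERS = "abcdefgh"
# (letter change, number change): 2 then 1, or 1 then 2, in every direction.
KNIGHT_STEPS = [(2, 1), (2, -1), (-2, 1), (-2, -1),
                (1, 2), (1, -2), (-1, 2), (-1, -2)]


def knight_words(word):
    """List the words a Knight may re-append to from the given word."""
    col, row = LETTERS.index(word[0]), int(word[1])
    targets = []
    for d_col, d_row in KNIGHT_STEPS:
        c, r = col + d_col, row + d_row
        if 0 <= c < 8 and 1 <= r <= 8:  # no words beyond a-h and 1-8
            targets.append(f"{LETTERS[c]}{r}")
    return targets


print(sorted(knight_words("d5")))
# ['b4', 'b6', 'c3', 'c7', 'e3', 'e7', 'f4', 'f6']
print(sorted(knight_words("g1")))
# ['e2', 'f3', 'h3']
```

The second call matches the “2. Nf3” move in the game record example: a Knight starting on g1 can reach f3 even though the words around it are occupied.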

Things I learned by thinking of Chess as a Word game

No wonder ChatGPT doesn’t play chess well (for now?). This was a really weird exercise, but I have a little more … “appreciation” of the difficulties a GPT’s associative network might face.

Side note: The fact that gpt-3.5-turbo-instruct seems to play chess better than the RLHF’d model, along with other observed differences (e.g., Fig 8 on page 12) between a base GPT and a base + instruction-fine-tuned + RLHF’d GPT, makes me wonder about the trade-offs we’re making with human childhoods and schooling.

If anyone knows about good human / psychological / developmental research correlating “ability to follow instructions” and “chess ability”, or “ability to please others” and “chess ability”, please point me in the right direction!

Note for humans: If you’re trying to be a GPT as in thought experiment 1, these rules shouldn’t be treated like rules you process explicitly with your system 2 mode. Attempting thought experiment 1 means learning implicitly without having explicit access to the rules of the game. You’d have to learn it the way you learned your native languages in childhood. Without knowing the explicit grammar rules of your native languages.

This is why I expect GPTs will be able to play chess before they have any idea what chess might even be. As with native languages, not explicitly knowing the rules never stopped anyone from acquiring certain aspects of the language or from using the language. (It’s interesting to compare which aspects of language you can acquire implicitly or not, and whether it’s the same across humans and GPTs. But that’s a different post.)

Specifically, the scientific evidence that there’s a difference between learning language rules or grammar implicitly vs explicitly comes from a “large body of clinical literature … from the artificial grammar learning (AGL) paradigm, where patients learned to decide whether the string of letters followed grammatical rules or not. Healthy participants were found to learn to categorize grammatical and ungrammatical strings without being able to verbalize the grammatical rules. Evidence from amnesic patients points toward implicit learning being intact in patients even though their explicit learning was severely impaired (Knowlton et al., 1992; Knowlton and Squire, 1996; Gooding et al., 2000; Van Tilborg et al., 2011).” (from Salavia, Shukla, Bapi, 2016).

It seems like training ANNs in general, or GPTs in particular (with linguistic text input), using deep-learning style optimisation gets us fuzzy programs that seem to behave similarly to human “implicit” learning. Traditional programming with if statements, while loops, and switch statements gets us non-fuzzy, rule-based algorithmic programs that seem to behave like “explicit” learning. Once we figure out how to put two and two together … what does that give us?

If you consider “grammar” as the rules of a language, then “chess rules” are the grammar of chess. We know that GPTs can become syntactically fluent (around OpenAI’s GPT-2 ish) before becoming semantically passable (GPT-3 ish) and pragmatically not terrible (GPT-3.5+). Can we use this progression to figure out which aspects of chess a certain level of GPT would or wouldn’t be good at? What’s the equivalent of semantics and pragmatics in chess?

I am very curious how a text-only GPT would infer and distinguish between legal and illegal moves from textual game records only, much less board positions. If something like the above description of chess in this post was never in the training data before, and you, a human, were learning chess from intuitively (system 1 style) trying to look at pure associations between things like “1. d4 Nf6 2. c4 e6 3. Nf3 d5 4. Nc3 Be7 5. Bf4 O-O 6. Nb5 $2 Na6 $9 7. e3 c6 $6 8. Nc3 Nc7 9. Rc1 $6 b6 10. Qb3 Ba6 11. Qa4 $6 Qd7 $4 12. Bxc7 $1 Qxc7” … what cues do you use to tell legal moves from illegal ones? Are there game records out there with illegal moves and some marker of illegality? And I wonder what it’d be like to have a sense for when an “x” or “+” or “-” will sometimes pop up in weird places? Also, where are the pawns?

Pawns. Oh my goodness, they were so complicated to describe, I decided to skip them in case I got something terribly wrong (was En Passant always a thing?).

Also, I’m left wondering how a hypothetical GPT that was learning purely algebraic chess notation would notice that individual pawns even exist if any move they make just gets written down as the square coordinate?

Same question applies to Rooks, and Knights, but at least there are only 2 of them.

OpenAI’s actual ChatGPT or GPT-3 or GPT-4 trained on the internet probably has access to more than just algebraic chess notation (though I’m not sure if that’s helpful or hurting the association learning). I’d still expect GPT-3/4 chess to be most weird with pawns considering the weirdness of chess notation as training data. I don’t doubt that a GPT can learn the associations, but I would expect it to make mistakes with pawns in a way that’s similar to how human children learning English sometimes incorrectly learn to say “goed” and “doed” along the way, more than with queens, for example. (And I’ve been saying “a GPT” and not just “a transformer” because it’s not just the architecture I’m particularly curious about here. It’s the training input + architecture combo I’m wondering about.)

Obvious in hindsight, but color is extremely irrelevant in this version since it’s not used to describe the board state. The only important thing color does when you don’t think of chess (or Go, or Othello, or Checkers for that matter) as a board game is to distinguish which player you are. So black and white could be Alice and Bob as far as it matters to a GPT.

Symmetry is another one of those representations that seems obvious when you see chess as a board game, but sort of a minor-ish detail when you think of it as a word game. It’s kind of hard to convey that the “board” is symmetric without invoking visuospatial imagery, and there’s no real need to spell that out. It only really helps when talking about pawns and their allowed moves, since they are the only pieces that can move in only 1 direction. (Even then I think it could be skipped, if you are willing to write a longer enumerated description of how a pawn moves.) So I wonder if a GPT would first naturally learn to represent its chess moves mainly from one player’s point of view rather than encode things symmetrically from the get-go? I heard there was some mechanistic interpretability work on Othello that might have looked into this. I’ll have to hunt it down and see whether this hypothesis checks out.

It was kind of hard to find a way to convey the continuity of a board in word form. It was extremely hard to convey the concept of moving a piece through another piece without reference to spatial imagery. I’m not really confident I succeeded at this. Explaining “moving” as incrementing and decrementing through letter-number combinations feels borderline ok and only works if someone (or something) already intuitively gets that ABCs and 123s are ordered in a certain way, and it’s not “a, c, d, b” or “1, 3, 2”.

There are a lot of assumptions that don’t usually need to be spelled out if you think of chess as a board game.

For example, because of the way a regular board is sized, it’s pretty intuitively conveyed that you can only put one piece on one square because usually you can’t fit more than one piece on one square comfortably. (What happens if you make a chess board that has huge squares compared to its pieces?)

It’s also pretty extraneous to say that when you move a piece, that piece is no longer at the previous location it was at. Moving the piece in space inherently removes that piece from its original location. You can’t duplicate physical chess pieces as easily as you can duplicate computer bits or letters on a screen.

I found it easiest to describe the allowed moves for pieces in the order of Rook and Bishop, then Queen and King, because I found it easier (and shorter) to use the instructions for e.g., the Queen to describe the King’s move set. I could’ve also just enumerated the moves for the King like I did in the example.

I wonder if a GPT would learn the move set in a similar order? I sort of doubt it, because that would require some look ahead or “top-down” processing capability, but it would be cool to find a principled way to predict the order in which a GPT (on average) learns the allowed set of moves for chess pieces and see if this (on average) resembles the order in which humans do it or not.

What even is the order people normally learn chess piece moves in? Is there a preferred one? An easier/harder one?

I also wonder if a GPT would learn the move set in a similar order to a MuZero? (Complimentary and complementary psychology experiment idea: if you “teach” chess to a person by letting them move a piece and only giving them feedback on whether it was “allowed”/“legal” or not, like MuZero, which piece’s move set is the easiest for the person to pick up? Which piece’s move set do they tend to understand consistently first? Which piece’s move set confuses them the most? In what order will the person come to understand the allowed moves of the various pieces? Is this order comparable to GPT or MuZero, holding other significant variables equal, like making sure this person had absolutely zero idea what chess was beforehand?)

I actually described the King move set first because I was following the order in which I introduced the pieces, before I rewrote the King move set as “Queen but with constraints”. The Queen could also have been described as “King but with fewer constraints”. But since I described the Rook and Bishop without referencing any constraints on the number of steps they could increment/decrement, it was simpler to describe the Queen first as “Queen = Bishop + Rook” and then say King = “Queen but 1 step only”, rather than say “King = Bishop + Rook but 1 step only” and then “Queen = King but any number of steps”. But from an associative learning standpoint, it might be easier to notice that the King only ever moves within 1 letter-number increment/decrement than to notice that the Queen does the same but for a variable n. How do you find out?

I’m really excited for any future mechanistic interpretability research on chess-playing GPTs. I wonder if the learned representations of move sets will look anything like the ones I described?